Despite concerns about accuracy, bias, and « hallucinations » in outputs, journalists and fact-checkers are increasingly using generative AI (GAI) tools. This research note highlights the importance of effective risk mitigation strategies, including (G)AI literacy, ethical practices, and the development of advanced prompting strategies.

UPDATED: 15 APRIL 2025

Technology has always been a part of the journalist’s apparatus, demonstrating a profession to adapt quickly to new tools and techniques that strengthen their professional routines and workflows. Although it is impossible to precisely quantify the extent to which generative AI (GAI) technologies have permeated these practices since the launch of ChatGPT in late November 2022, exploratory research and surveys in the field have shown a high level of engagement in newsrooms with the use of these technologies (Caswell, 2024: Cools & Diakopoulos, 2024; Cuartielles et al., 2023). One of the most solid indicators of this commitment is the number of news organisations that have adopted guidelines to frame the responsible use of AI. At the University of Bergen, we found 36 texts from news and professional organisations in Western and Northern Europe, including two ethical journalism codes that have been updated to reflect concerns related to GAI systems (Dierickx & Lindén, 2025). Before ChatGPT, the use of AI technologies in journalism was not considered critical by most European news media industry players, including self-regulatory bodies (Porlezza, 2024).

Concerns on accuracy and hallucinations

GAI systems, and large language models (LLMs) in particular, are not without limitations and drawbacks when it comes to journalism and fact-checking, a sub-genre of journalism characterised by robust methodological foundations and narrative transparency which consists of verifying facts after they have been published (Dierickx et al., 2024: Jones et al., 2023). One of the major concerns relates to the potential for plagiarism, as the reliance on copyrighted data raises questions about the ownership and originality of the content produced. LLMs learn from patterns and associations found in their training data, which often includes a vast array of copyrighted material. Furthermore, LLMs do not adequately understand the content they generate. Therefore, they cannot discern the nuanced distinctions between original ideas and those derived from pre-existing sources. In addition, LLMs lack the capacity for logical reasoning and ethical judgement as they do not understand the content and context they are producing. Other concerns relate to the nature of their training data, which were massively collected from the web, including ideologically biased data and user-generated content, which potential issues on accuracy and reliability are two major obstacles for being used in journalism and fact-checking. Consequently, LLMs are likely to generate biased and inaccurate content derived from unreliable sources, as the systems are not equipped to assess these features (e.g. Augenstein et al., 2024; Berglund et al., 2023; Dwivedi et al., 2023; Li 2023).

In addition, LLMs systems are prone to « artificial hallucinations », producing content that does not correspond to factual or verified information. This phenomenon is well documented in LLMs due to a combination of factors, including the large amount of training data and the complexity of the models’ processing mechanisms. Due to the models’ ability to generate plausible text based on patterns learned from their training data, hallucinations are not merely bugs. Instead, they also reflect a lack of reasoning and a focus on producing text that appears true rather than accurate (e.g. Beutel et al., 2023; Hicks et al., 2024; Rawte et al., 2023; Ji et al., 2023). Therefore, it increases the likelihood of producing content that deviates from the ground truth, further complicating their use in journalism and fact-checking (Dierickx et al., 2023).

Prompting as a risk mitigation strategy

Despite their shortcomings and drawbacks, AI systems have rapidly gained acceptance in newsrooms, raising concerns about the need for effective risk mitigation strategies that ensure responsible use and accountable practices. To address these challenges, during my fellowship at the Digital Democracy Centre (SDU, Denmark) and my postdoctoral work at the University of Bergen, I and my colleagues have developed a framework for responsible use of AI in journalism and fact-checking that includes three interlocking and complementary strategies: (1) promoting GAI literacy to understand how these systems work and their limitations; (2) promoting ethics rooted in human responsibility to ensure that human oversight remains integral to the editorial process; and (3) developing prompting strategies to improve the quality of the output produced (Dierickx et al. , 2024). This framework is reflected in an integrative approach to journalism education and training in AI that includes substantive, ethical and technical skills (Lopesoza et al., 2023).

Prompt engineering involves the design of natural language prompts to enhance user interaction with LLMs, allowing engagement without the need for advanced technical skills. Prompts act as instructions to an LLM to enforce rules, automate processes and ensure desired qualities in the output generated. In computer science, prompts function as a form of programming that customises interactions with an LLM (Marvin et al., 2023; White et al., 2023). Research has shown that well-designed prompts can increase explainability and reduce the generation of fabricated content.

Prompt engineering helps the model understand the specific task it is expected to perform, providing accurate and relevant responses and effectively adapting the model to the task at hand. This process involves defining the types of tasks suitable for processing with LLMs.

Prompting skills for non-experts

As journalists and fact-checkers are non-expert users, prompting often equates to a try-error process, whereas a well-designed prompt should provide clear guidance and facilitate task completion. It also requires complex reasoning to examine the model’s errors, hypothesise what is missing or misleading in the current prompt and clearly communicate the task. Therefore, while it may look simple and intuitive, it relies on complex strategies to achieve accurate results (Ye et al., 2023). The more precise the task or directive provided, the more effectively the model performs, aligning its responses more closely with the user’s expectations (Bsharat et al., 2023). It is also acknowledged that optimal prompting meets the needs of users (Henrickson & Meroño-Peñuela, 2023).

Prompting techniques as used by non-experts facilitate user interaction and problem-solving, for example, by providing context in the prompt, and are a promising way to improve the accuracy, reliability and overall quality of the outcomes. Research suggests that creative and contextual prompting techniques also reduce the generation of misleading content. Furthermore, this approach contributes to AI literacy and system explainability, helping users to understand the basis of the system’s decision-making processes (e.g., Feldman et al., 2023; Knoth et al., 2024; Lee et al., 2024; Lo, 2023). Effective prompting techniques combine content knowledge, critical thinking, iterative design, and a deep understanding of LLMs’ capabilities (Bozkurt & Sharma, 2024; Cain, 2023; Walter, 2024).

UNESCO’s first global guidance on GenAI in education recognises that prompting is not a straightforward process and that more sophisticated outputs need skilled human input that must be critically evaluated (Miao & Holmes, 2023). At the same time, prompting techniques are considered a new fundamental digital competence (Korzynski et al., 2023).

A framework for journalism and fact-checking

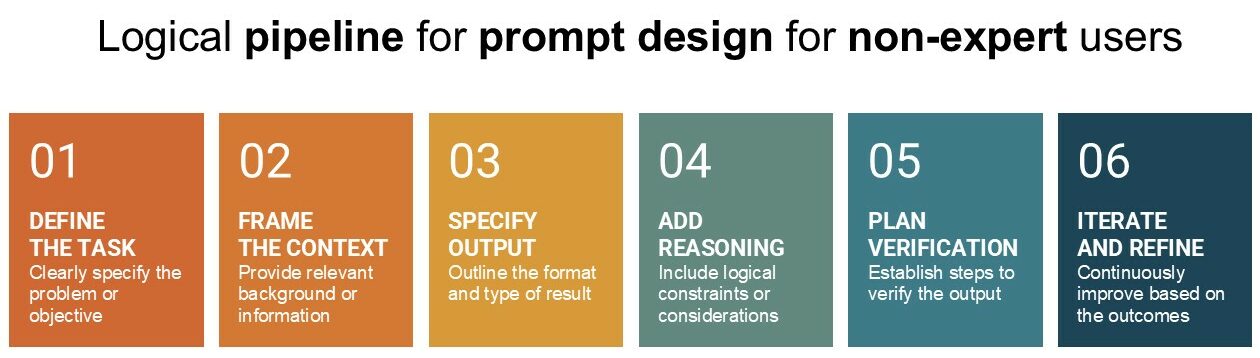

The literature on prompting offers valuable guidance on the elements that contribute to an effective prompt. While these frameworks provide general principles, they can also be adapted to specific tasks such as in fact-checking. For instance, prompt design begins with clearly defining the task by specifying the problem or objective to be solved. The context should then be established, providing relevant background or additional information to guide the model. Next, the output must be clearly specified, outlining the expected format and type of result required. Reasoning should follow to address any specific logical constraints or considerations essential to the task. Verification planning is crucial, ensuring the output meets expectations and complies with ground-truth, an especially critical aspect in fact-checking. Finally, the prompt design process should be iterative, continually refined through the evaluation of previous results to enhance accuracy and relevance.

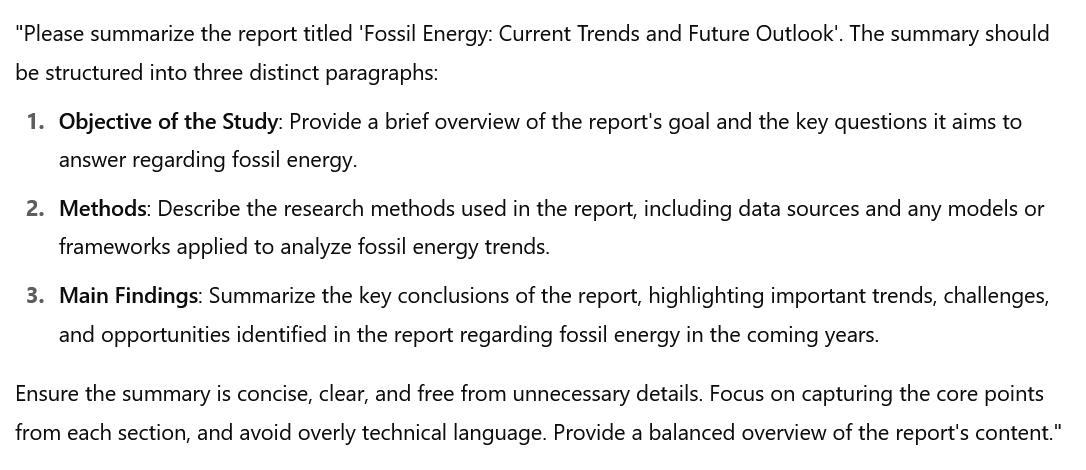

Such a pipeline only allows you to understand the components of the prompts. For example, if I want to write a summary of a report on fossil energy, I first need to define the task (the summary), frame the context (who published this report and when), specify the type of output expected (e.g. one paragraph on the aim of the study, another on the methods, another on the main findings), and add a rationale to ensure clarity in the distinctions between sections. However, while this structure helps to break down the process, it does not provide the practical steps or concrete methods needed to write the prompt itself. In order to produce the summary effectively, I need to determine how to write each section clearly and concisely, decide on the level of detail required for each part, and ensure that the model understands the need for a balanced overview without overloading it with unnecessary information. In addition, this iterative approach requires ongoing testing and refinement to address any potential gaps or misalignments in the output, especially when tailored for non-experts.

While the theoretical pipeline provides a useful framework, non-expert users often need more specific guidance, such as step-by-step examples, to avoid the trial-and-error process associated with rapid design. At the same time, some work has also highlighted the importance of meta-prompting, recognising that the best teachers can sometimes be the models themselves (e.g. de Wynter et al., 2023). I therefore asked ChatGPT to generate an initial prompt for summarising a report on fossil energy, based on this logical pipeline.

References

Augenstein, I., Baldwin, T., Cha, M., Chakraborty, T., Ciampaglia, G. L., Corney, D., … & Zagni, G. (2024). Factuality challenges in the era of large language models and opportunities for fact-checking. Nature Machine Intelligence, 1-12.

Berglund, L., Tong, M., Kaufmann, M., Balesni, M., Stickland, A. C., Korbak, T., & Evans, O. (2023). The reversal curse: LLMs trained on » a is b » fail to learn » b is a ». arXiv preprint arXiv:2309.12288.

Beutel, G., Geerits, E., & Kielstein, J. T. (2023). Artificial hallucination: GPT on LSD?. Critical Care, 27(1), 148.

Bozkurt, A., & Sharma, R. C. (2023). Generative AI and prompt engineering: The art of whispering to let the genie out of the algorithmic world. Asian Journal of Distance Education, 18(2), i-vii.

Bsharat, S. M., Myrzakhan, A., & Shen, Z. (2023). Principled instructions are all you need for questioning llama-1/2, gpt-3.5/4. arXiv preprint arXiv:2312.16171.

Cain, W. (2024). Prompting change: exploring prompt engineering in large language model AI and its potential to transform education. TechTrends, 68(1), 47-57.

Caswell, D. (2024). Audiences, automation, and AI: From structured news to language models. AI Magazine.

Cools, H., & Diakopoulos, N. (2024). Uses of generative ai in the newsroom: Mapping journalists’ perceptions of perils and possibilities. Journalism Practice, 1-19.

Cuartielles, R., Ramon-Vegas, X., & Pont-Sorribes, C. (2023). Retraining fact-checkers: The emergence of ChatGPT in information verification. Profesional de la Información, 32(5).

Dierickx, L., & Lindén, C.-G. (2025). Intelligence artificielle et journalisme : Des règles pour garantir la qualité de l’information. In Actes du 9e Colloque Document Numérique et Société: Information et IA. Louvain-la-Neuve (BE): De Boeck supérieur.

Dierickx, L., Van Dalen, A., Opdahl, A. L., & Lindén, C. G. (2024, August). Striking the Balance in Using LLMs for Fact-Checking: A Narrative Literature Review. In Multidisciplinary International Symposium on Disinformation in Open Online Media (pp. 1-15). Cham: Springer Nature Switzerland.

Dierickx, L., Lindén, C. G., & Opdahl, A. L. (2023, November). The Information Disorder Level (IDL) Index: A Human-Based Metric to Assess the Factuality of Machine-Generated Content. In Multidisciplinary International Symposium on Disinformation in Open Online Media (pp. 60-71). Cham: Springer Nature Switzerland.

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., … & Wright, R. (2023).“So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642.

Feldman, P., Foulds, J. R., & Pan, S. (2023). Trapping llm hallucinations using tagged context prompts. arXiv preprint arXiv:2306.06085.

Henrickson, L., & Meroño-Peñuela, A. (2023). Prompting meaning: a hermeneutic approach to optimising prompt engineering with ChatGPT. AI & Society, 1-16.

Hicks, M. T., Humphries, J., & Slater, J. (2024). ChatGPT is bullshit. Ethics and Information Technology, 26(2), 38.

Ji, Z., Lee, N., Frieske, R., Yu, T., Su, D., Xu, Y., … & Fung, P. (2023). Survey of hallucination in natural language generation. ACM Computing Surveys, 55(12), 1-38.

Jones, B., Luger, E., & Jones, R. (2023). Generative AI & journalism: A rapid risk-based review. Edinburgh Research Explorer, University of Edinburgh.

Knoth, N., Tolzin, A., Janson, A., & Leimeister, J. M. (2024). AI literacy and its implications for prompt engineering strategies. Computers and Education: Artificial Intelligence, 6, 100225.

Korzynski, P., Mazurek, G., Krzypkowska, P., & Kurasinski, A. (2023). Artificial intelligence prompt engineering as a new digital competence: Analysis of generative AI technologies such as ChatGPT. Entrepreneurial Business and Economics Review, 11(3), 25-37.

Lee, U., Jung, H., Jeon, Y., Sohn, Y., Hwang, W., Moon, J., & Kim, H. (2024). Few-shot is enough: exploring ChatGPT prompt engineering method for automatic question generation in english education. Education and Information Technologies, 29(9), 11483-11515.

Lo, L. S. (2023). The CLEAR path: A framework for enhancing information literacy through prompt engineering. The Journal of Academic Librarianship, 49(4), 102720.

Lopezosa, C., Codina, L., Pont-Sorribes, C., & Vállez, M. (2023). Use of generative artificial intelligence in the training of journalists: challenges, uses and training proposal. Profesional de la información, 32(4).

Marvin, G., Hellen, N., Jjingo, D., & Nakatumba-Nabende, J. (2023, June). Prompt engineering in large language models. In International conference on data intelligence and cognitive informatics (pp. 387-402). Singapore: Springer Nature Singapore.

Miao, F., & Holmes, W. (2023, September 7). Guidance for generative AI in education and research. UNESCO. https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research

Porlezza, C. (2024). The datafication of digital journalism: A history of everlasting challenges between ethical issues and regulation. Journalism, 25(5), 1167-1185.

Rawte, V., Sheth, A., & Das, A. (2023). A survey of hallucination in large foundation models. arXiv preprint arXiv:2309.05922.

Walter, Y. (2024). Embracing the future of Artificial Intelligence in the classroom: the relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21(1), 15.

White, J., Fu, Q., Hays, S., Sandborn, M., Olea, C., Gilbert, H., … & Schmidt, D. C. (2023). A prompt pattern catalog to enhance prompt engineering with chatgpt. arXiv preprint arXiv:2302.11382.

Ye, Q., Axmed, M., Pryzant, R., & Khani, F. (2023). Prompt engineering a prompt engineer. arXiv preprint arXiv:2311.05661.