They are not trusted, but they are used. Although they are neither entirely reliable nor consistently accurate, large language models (LLMs) are the subject of daily experimentation by journalists and are likely to be used at every stage of the news process. A previous series of blog posts identified where AI is used in news production and distribution workflows. It also defined a set of twenty tasks that LLMs can perform, based on task definitions from machine learning and natural language processing. This post builds on that work. It sets out how LLMs can support the different stages of the journalistic process, assesses the risks at each step, and suggests strategies for safe and meaningful use.

This attempt to review practices and analyse risks is inspired by a consequentialist ethical approach, which evaluates practices according to their impact on information quality.

Supporting story discovery, document exploration, and early research

Risk Level: Moderate to high

LLMs help journalists scan news sources, forums, databases, and social media for emerging topics. They assist in exploring large sets of documents by extracting entities, detecting patterns, and summarising content, which makes data mining and early analysis more accessible. However, the risks here are substantial. LLMs do not “understand” material. Instead, they identify statistical patterns, which means they may miss key insights, overlook contradictions, or highlight superficial trends. Complex investigative leads could be ignored, and over-reliance on AI may flatten narratives, introduce bias, or lead to shallow interpretations.

Research and interview preparation

Risk Level: Low to moderate

LLMs help journalists broaden their perspectives by compiling background briefings, suggesting expert contacts, anticipating interview questions, and simulating potential dialogues. The main risks involve outdated, incomplete, or biased suggestions. Sources and background information therefore need to be verified independently, as erroneous or skewed insights may otherwise become embedded early in the process. Moreover, LLMs reproduce existing patterns rather than generate original ideas. They are therefore best treated as an instrument for stimulating creativity rather than as a source of novel insights. On a more practical level, entering sensitive or confidential information into LLMs is not recommended because of security concerns.

Drafting and refinement

Risk Level: Moderate to high

LLMs assist in structuring drafts, suggesting headlines, and exploring stylistic variations. They can quickly propose multiple narrative approaches, helping journalists move from idea to written content faster. However, “hallucination” (the confident fabrication of false information) remains a persistent problem. AI-generated outputs can also reflect hidden biases, favour specific framings over others, or fail to deliver a balanced narrative. Human journalists should therefore rework any text generated by an LLM to ensure factual accuracy, consistency, and stylistic integrity.

Editing, fact-checking, and verification

Risk Level: High

While LLMs can suggest clarifications, detect inconsistencies, or propose potential fact-checking targets, they should never be relied upon as verifiers. LLMs are prone to inventing quotes, misrepresenting facts, or subtly distorting the context of information. Every factual claim flagged by AI must be independently verified through primary sources, trusted references, or direct reporting. Editorial oversight at this stage is non-negotiable. LLMs may even create additional work, because generated content often requires closer scrutiny and more correction. They can assist in assessing collected evidence, but they cannot replace thorough, human-driven verification.

Final editing, language checking, and risk of misinterpretation

Risk Level: High

LLMs can support final editing by checking grammar, punctuation, and style, but they struggle with more nuanced aspects of language, particularly when context is essential. They often fail to fully grasp polysemy, where a single word has several related meanings. They also struggle with homonyms, which are words that share a spelling or pronunciation but have unrelated meanings. Both can lead to misinterpretation or ambiguity. Their lack of contextual understanding means they may miss how specific phrases, idioms, or terminology should be used in a particular journalistic context. Human review is therefore essential to ensure that meanings are conveyed as intended. It also avoids errors that could confuse readers or misrepresent the story.

Publishing and distribution

Risk Level: Low to moderate

LLMs can help optimise content for SEO, generate catchy headlines, create social media posts, and draft meta descriptions, thereby maximising the reach and visibility of stories. The risks are lower here, but they still exist: LLMs can unintentionally encourage sensationalism and clickbait, or misframe the ethical context of a story. Human editorial oversight is therefore needed to ensure that promotional materials remain faithful to the story. This oversight helps avoid ethical pitfalls and misleading impressions.

Audience engagement and feedback analysis

Risk Level: Low

After publication, LLMs can summarise reader comments, detect emerging reactions, and suggest follow-up topics. This allows journalists to monitor audience sentiment without manually sifting through large volumes of feedback. However, AI can misinterpret sarcasm, irony, or polarised language, leading to an incorrect assessment of audience sentiment. AI-generated insights are therefore useful as guidance but should not be treated as definitive answers. Humans should interpret the data and act on it cautiously.

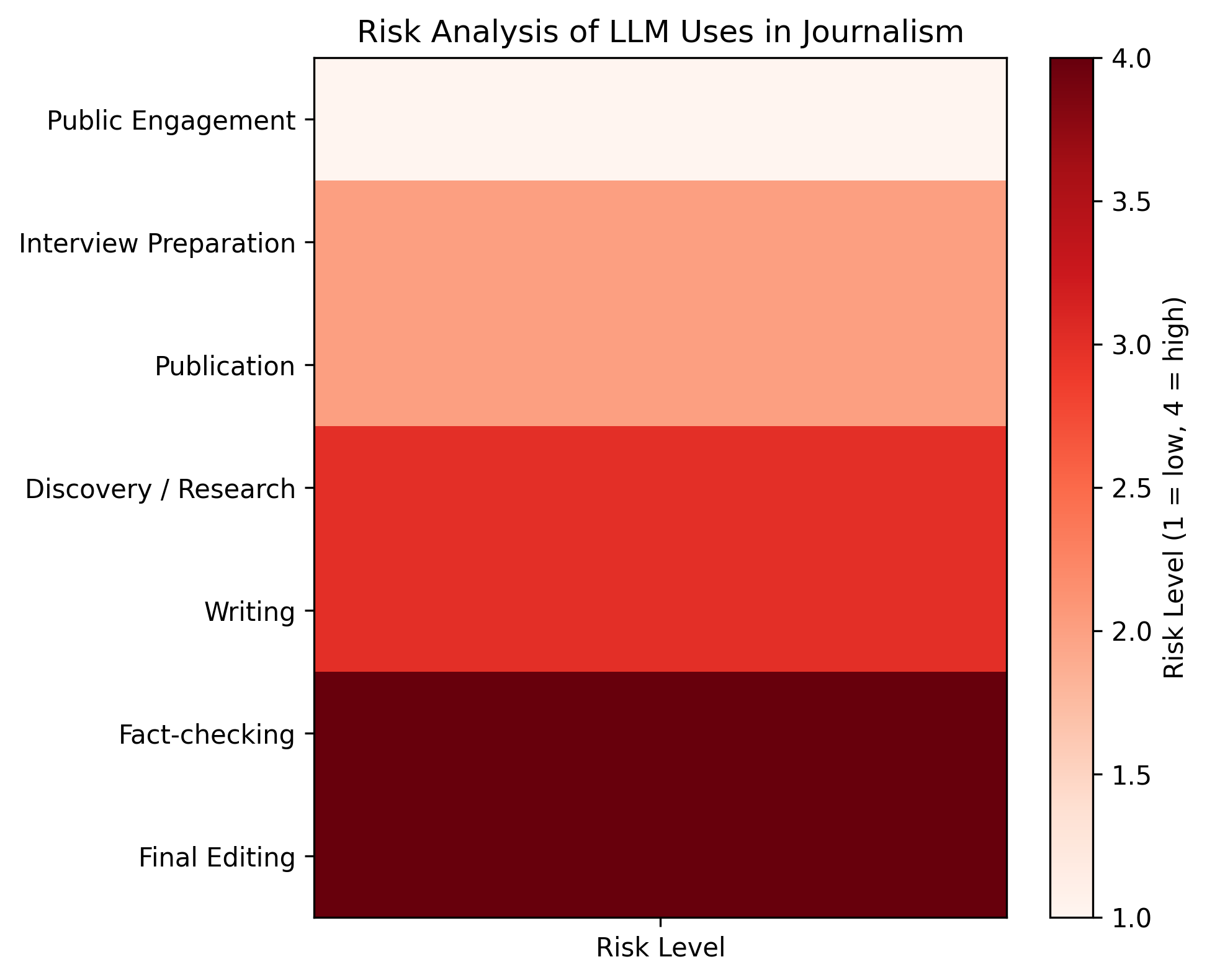

Risk Matrix: Stage-based risk assessment

| Process Step | Risk Level | Risk Mitigation |
|---|---|---|
| Story discovery and preliminary research | Moderate to high | Critical verification of information; cross-checking and diversifying sources; treat AI as a starting point |
| Interview preparation and research | Low to moderate | Verify the timeliness and relevance of questions; human rephrasing to avoid bias and stereotypes; do not use sensitive or confidential data |
| Drafting and writing enhancement | Moderate to high | Systematic editorial oversight; fact-checking; human rewriting to maintain stylistic and narrative consistency |
| Verification and editing (fact-checking) | High | Confirm each element with reliable primary sources; do not treat the LLM as an autonomous fact-checker; essential human supervision |
| Final editing and linguistic review | High | Human proofreading to ensure meaning and nuance; control of ambiguities (homonyms, polysemy, idioms); validation of stylistic consistency and integrity |
| Publication and distribution | Low to moderate | Verify the accuracy of promotional content; review headlines, hooks, and meta descriptions to avoid sensationalism and clickbait; human oversight to ensure editorial integrity |
| Audience engagement and comment analysis | Low | Cautious interpretation of sentiment analysis; human review to detect sarcasm or irony; use results as indicators, not as definitive truths |
