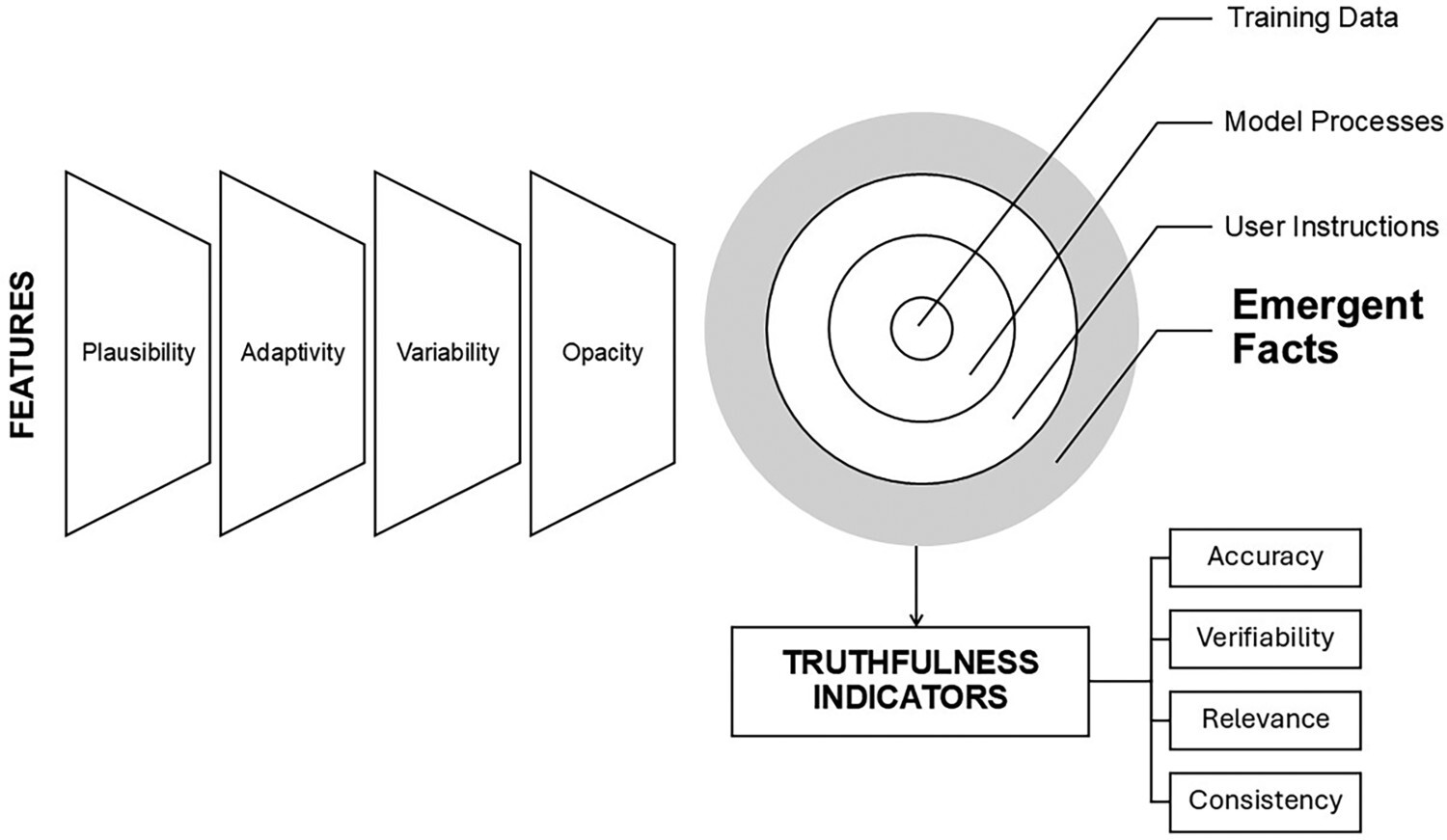

Generative AI systems produce outputs that are coherent and contextually plausible yet not necessarily anchored in empirical evidence or ground truth. This challenges traditional notions of factuality and prompts a re-evaluation of what counts as a fact in computational contexts. This paper offers a theoretical examination of AI-generated outputs, using fact-checking as an epistemic lens. It analyses how three categories of facts (evidence-based facts, interpretative-based facts and rule-based facts) operate in complementary ways, and it shows where each falls short when applied to AI-generated content. To address these shortcomings, the paper introduces the concept of emergent facts, drawing on emergence theory in philosophy and complex systems theory in computer science. Emergent facts arise from the interaction among training data, model architecture and user prompts; although often plausible, they remain probabilistic, context-dependent and epistemically opaque. By articulating this framework, the study advances the epistemology of verification and proposes a structured approach to evaluating AI outputs. It thereby offers a novel contribution to ongoing debates in epistemology, AI ethics and media studies at a moment when the nature of factuality is being fundamentally renegotiated.